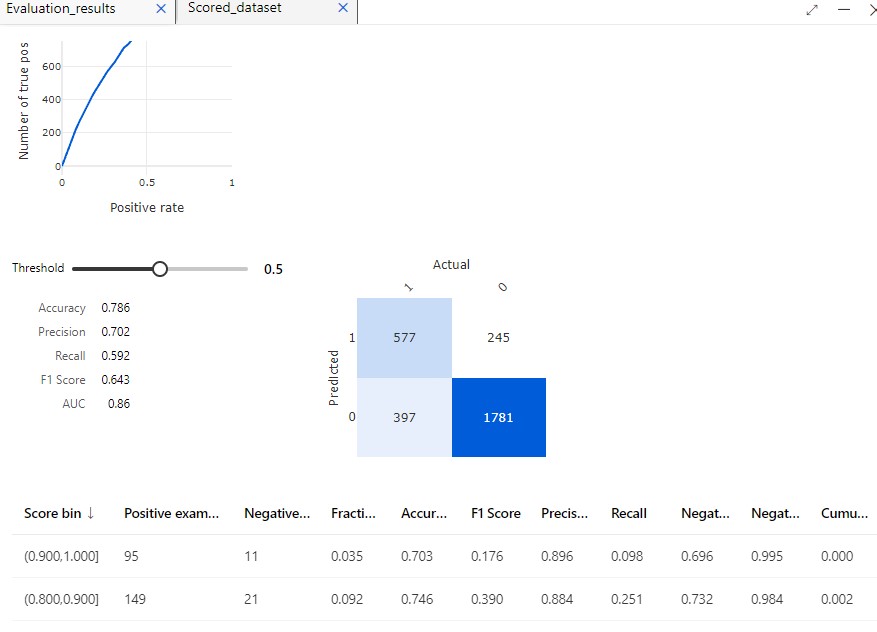

While deep diving into Azure Machine Learning Studio, I have also been exploring the performance metrics it reports, specifically for classification ML models.

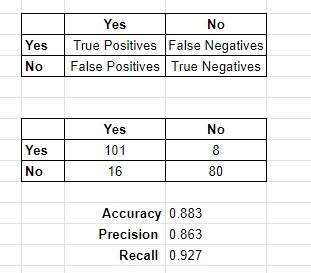

The confusion matrix is one of the key tools for evaluating classification machine learning models. It shows how the outcomes predicted by your model are distributed against the actual outcomes: true positives, false positives, true negatives, and false negatives.
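To make the four cells concrete, here is a minimal sketch in plain Python that counts them for a Yes/No problem. The function name `confusion_counts` and the sample labels are made up for illustration; they do not come from Azure ML.

```python
from collections import Counter

def confusion_counts(actual, predicted, positive="Yes"):
    """Count TP, FP, FN and TN for a binary (two-class) problem."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            # Predicted positive: correct if the actual label is positive too.
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Predicted negative: a miss if the actual label was positive.
            counts["FN" if a == positive else "TN"] += 1
    return counts

# Hypothetical labels, invented for this example only.
actual    = ["Yes", "Yes", "No", "No",  "Yes", "No"]
predicted = ["Yes", "No",  "No", "Yes", "Yes", "No"]

counts = confusion_counts(actual, predicted)
print(counts["TP"], counts["FP"], counts["FN"], counts["TN"])
```

All of the metrics in this post are built from these four counts.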

Accuracy is the proportion of correct predictions (true positives and true negatives) out of all cases.

Accuracy = (True Positives + True Negatives) / TOTAL

Precision is the proportion of true positives among everything the model predicted as positive.

Precision = True Positives / (True Positives + False Positives)

Recall is the proportion of actual positive instances that the model correctly identified.

Recall = True Positives / (True Positives + False Negatives)

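*F1 score* is the harmonic mean of precision and recall (the Azure documentation describes it as their weighted average). It ranges between 0 and 1, where the ideal F1 score value is 1.

F1 = 2 × (Precision × Recall) / (Precision + Recall)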
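The formulas above can be sketched in a few lines of Python. The counts below are hypothetical numbers chosen only so the arithmetic is easy to follow, and `classification_metrics` is an illustrative helper, not part of any Azure SDK.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1 from confusion matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts, invented for illustration.
metrics = classification_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With these counts, accuracy is 85/100, precision is 40/50 and recall is 40/45, so F1 lands between precision and recall, closer to the lower of the two.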

Area under the Receiver Operating Characteristic Curve (AUC) measures the quality of the model's predictions irrespective of what classification threshold is chosen. A value close to 1 is the target, while 0.5 is what random guessing would achieve.
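One way to see why AUC does not depend on a threshold: it equals the probability that a randomly chosen positive case gets a higher score than a randomly chosen negative one. The sketch below computes it that way in plain Python. This is an illustration of the idea under that interpretation, not how Azure ML computes it internally, and the labels and scores are made up.

```python
def auc_by_pairs(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    labels: 1 for positive, 0 for negative.
    scores: predicted probability (or any score) for the positive class.
    Ties count as half a correct ranking.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Hypothetical labels and scores, invented for illustration.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
auc = auc_by_pairs(labels, scores)
print(auc)
```

The pairwise loop is quadratic in the number of cases, so it is only meant for small examples like this one.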

Reference: https://docs.microsoft.com/en-us/azure/machine-learning/component-reference/evaluate-model

Let's apply these metrics to a simplified Yes or No classification scenario where we have the results below:

You do not have to calculate any of these metrics manually. You can use the Evaluate Model component in Azure ML Studio and check all the results there.