A brief look into Agentic Design Patterns

Over the past year, AI has shifted from simple prompt–response chatbots to agentic systems that can plan, act, use tools, and coordinate across complex workflows. Instead of treating the model as a passive text generator, developers now orchestrate it as an active decision-maker inside software systems.

As the field accelerates, teams are converging on a shared vocabulary: agentic AI design patterns.

These patterns are the reusable “architectural shapes” that define how an AI agent thinks, reasons, acts, and interacts with humans and external systems.

Why Agentic Patterns Matter

While language models are powerful, the real magic happens in the orchestration layer—how we structure prompts, tool calls, planning cycles, and feedback loops.

Patterns give us:

- A blueprint for building safer, more predictable agent behavior

- Reusable mental models that reduce design complexity

- A common language for teams designing autonomous workflows

- A way to compare cost, latency, and reliability trade-offs

Think of them the way we think about microservices, MVC, or event-driven architectures: patterns that guide effective application design.

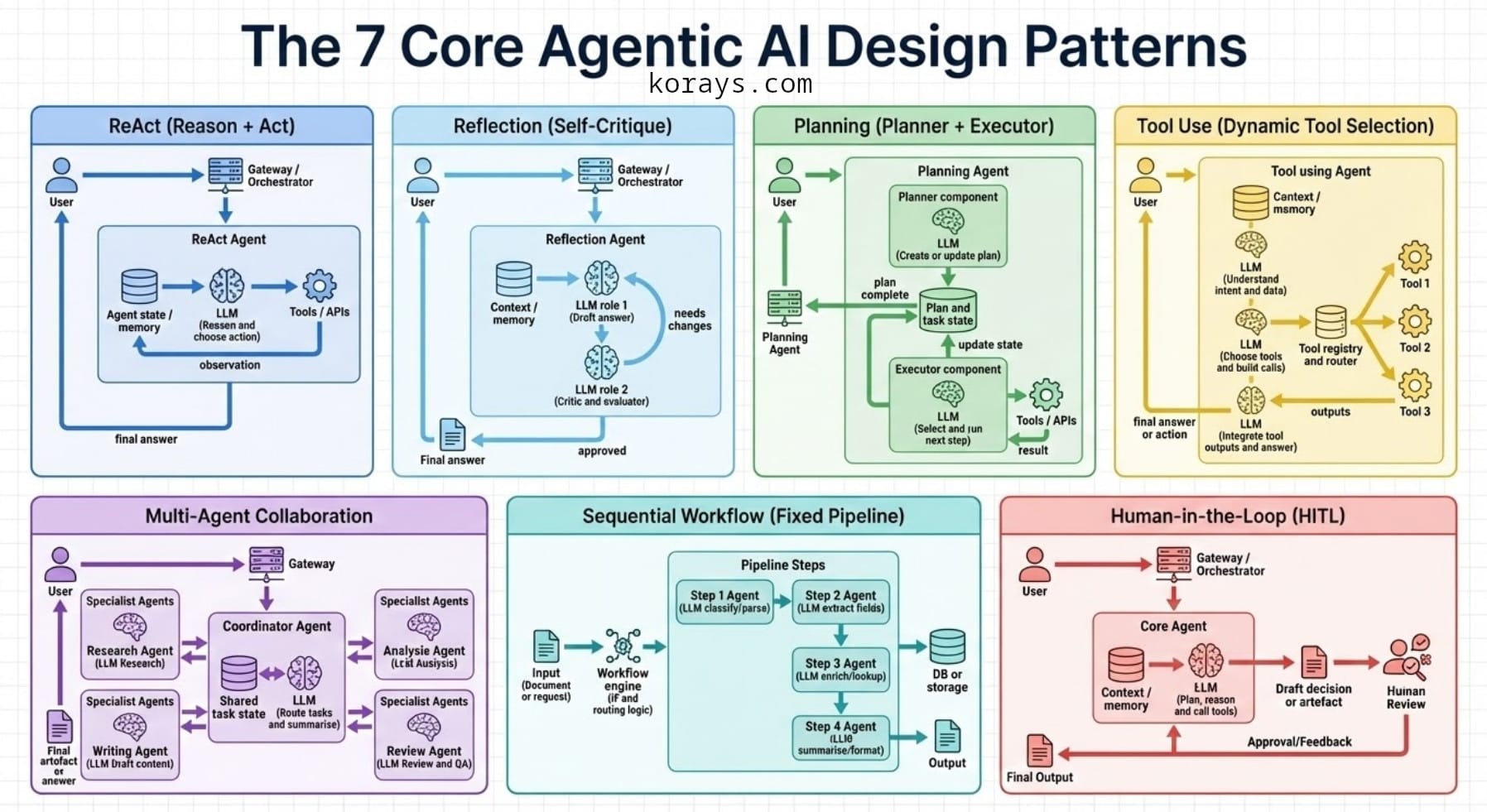

The 7 Core Agentic AI Design Patterns

1. ReAct (Reason + Act)

Agents alternate between reasoning and taking actions:

Thought → Action → Observation → New Thought → Action

Use when

- The problem is open-ended or investigative

- The agent must adapt step-by-step

- Tasks involve search, debugging, research, or troubleshooting

Watch out for

- High token consumption and higher latency

- Harder to trace if something goes wrong
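
The loop above can be sketched in a few lines. This is a minimal illustration, not a production agent: `scripted_policy` is a hypothetical stand-in for the model call, `lookup` is a made-up tool, and the `max_steps` cap is one assumed way to bound token use. Returning the full history alongside the answer is one way to keep the run traceable.

```python
from typing import Callable

# Hypothetical tool: a tiny lookup table standing in for a search API.
def lookup(query: str) -> str:
    facts = {"capital of France": "Paris"}
    return facts.get(query, "no result")

TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup}

def scripted_policy(history: list[str]) -> tuple[str, str, str]:
    """Stand-in for the model: returns (thought, action, argument).

    A real agent would send `history` to an LLM and parse its reply.
    """
    observations = [line for line in history if line.startswith("Observation: ")]
    if not observations:
        return ("I need to look this up.", "lookup", "capital of France")
    answer = observations[-1].removeprefix("Observation: ")
    return ("I have what I need.", "finish", answer)

def react_loop(question: str, policy=scripted_policy, max_steps: int = 5):
    """Alternate Thought -> Action -> Observation until the policy finishes."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):  # hard cap keeps cost and latency bounded
        thought, action, argument = policy(history)
        history.append(f"Thought: {thought}")
        if action == "finish":
            return argument, history
        observation = TOOLS[action](argument)
        history.append(f"Action: {action}[{argument}]")
        history.append(f"Observation: {observation}")
    return "gave up: step limit reached", history

answer, trace = react_loop("What is the capital of France?")
```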

2. Reflection (Self-Critique)

The agent writes an answer, then switches into reviewer mode and critiques or rewrites its own work.

Use when

- Quality is more important than speed

- You’re dealing with factual content, analysis, reports, or code

- Reducing hallucinations and errors is essential

Watch out for

- Multiple passes = more time and cost

- Agents can become overly conservative without good evaluation criteria
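
A draft-critique-revise cycle might look like the sketch below. The three functions are hypothetical stubs: in a real system each would be a model call with its own prompt, and `critique` would apply explicit evaluation criteria. The `max_rounds` limit is an assumed guard against endless revision.

```python
def draft(task: str) -> str:
    # Stand-in for the model's first attempt; deliberately flawed.
    return "the report covers q3 revenue"

def critique(text: str) -> list[str]:
    """Reviewer pass: return concrete problems; an empty list means accept."""
    problems = []
    if not text[0].isupper():
        problems.append("capitalize first letter")
    if not text.endswith("."):
        problems.append("end with a period")
    return problems

def revise(text: str, problems: list[str]) -> str:
    # Apply each fix the reviewer asked for.
    if "capitalize first letter" in problems:
        text = text[0].upper() + text[1:]
    if "end with a period" in problems:
        text = text + "."
    return text

def reflect(task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Return the accepted text and the number of revision rounds used."""
    text = draft(task)
    for round_number in range(max_rounds):
        problems = critique(text)
        if not problems:
            return text, round_number
        text = revise(text, problems)
    return text, max_rounds  # budget exhausted; return the best effort

final_text, rounds_used = reflect("Summarize Q3")
```

Because the reviewer returns a checkable list rather than a vague verdict, the loop has a clear stopping rule, which helps avoid the overly conservative behavior noted above.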

3. Planning (Task Decomposition)

The agent creates a plan before execution: breaking a task into milestones, dependencies, or subtasks.

Use when

- Tasks span multiple steps or domains

- A workflow has dependencies and ordering

- You need repeatability and structure

Watch out for

- Overkill for simple queries

- Plans must be revisited mid-run; static plans may drift
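
One way to represent a plan with dependencies is as a graph that is sorted before execution. The sketch below uses Python's standard `graphlib.TopologicalSorter`; the subtask names and the `execute` callback are illustrative assumptions, and the plan is static, so a real agent would still need a way to revise it mid-run.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each subtask maps to the set of subtasks it depends on.
plan = {
    "gather_data": set(),
    "clean_data": {"gather_data"},
    "analyze": {"clean_data"},
    "draft_report": {"analyze"},
    "collect_charts": {"analyze"},
    "publish": {"draft_report", "collect_charts"},
}

def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Order subtasks so every dependency runs first (raises on cycles)."""
    return list(TopologicalSorter(plan).static_order())

def run_plan(plan, execute):
    """Run each subtask, passing it the results of its dependencies."""
    results = {}
    for step in execution_order(plan):
        inputs = {dep: results[dep] for dep in plan[step]}
        results[step] = execute(step, inputs)
    return results

order = execution_order(plan)
results = run_plan(plan, lambda step, inputs: f"{step} done after {sorted(inputs)}")
```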

4. Tool Use (API and System Integration)

The agent chooses among available tools (search APIs, calculators, databases, CRMs, code execution) and uses them to act in the world.

Use when

- Real-time data or system interaction is required

- You need reliable, verifiable results (exact calculations or database lookups rather than model recall)

- The agent must take meaningful action beyond writing text

Watch out for

- Tool routing logic is a new layer of engineering

- Errors, rate limits, and tool misalignment can break workflows
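
The routing layer can be sketched as a small registry plus a dispatcher that turns every failure into data the agent can react to. The JSON request shape (`tool`, `arguments`) and both tools are assumptions made for illustration, not the format of any particular framework.

```python
import json

def add(a: float, b: float) -> float:
    return a + b

def get_order_status(order_id: str) -> str:
    # Hypothetical stand-in for a CRM or database lookup.
    orders = {"A-100": "shipped"}
    return orders[order_id]  # raises KeyError for unknown ids

TOOLS = {"add": add, "get_order_status": get_order_status}

def call_tool(request_json: str) -> dict:
    """Route one model-issued tool request; report errors instead of raising."""
    try:
        request = json.loads(request_json)
        name, arguments = request["tool"], request.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as error:
        return {"ok": False, "error": f"malformed request: {error}"}
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**arguments)}
    except Exception as error:  # tool failures become observations, not crashes
        return {"ok": False, "error": f"{type(error).__name__}: {error}"}
```

Returning a structured error lets the calling loop decide whether to retry, pick another tool, or give up, rather than having one bad call break the workflow.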

5. Multi-Agent Collaboration

A “team” of specialized agents coordinated by an orchestrator.

Examples: researcher → analyst → writer → reviewer.

Use when

- Tasks require specialization (legal, financial, technical)

- You want modularity and reusable roles

- You’re building large end-to-end workflows

Watch out for

- More moving parts, more failure points

- Longer latency and higher costs
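
A very small orchestrator for the researcher → analyst → writer → reviewer chain could look like this. The `Agent` class and the stub handlers are hypothetical; each handler stands in for a role-specific model call, and the hand-off here is strictly linear, which is only one of several possible coordination schemes.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    role: str
    handle: Callable[[str], str]  # stand-in for a role-specific model call

def make_stub(role: str) -> Agent:
    # Each stub just tags the work item so the hand-offs are visible.
    return Agent(role, lambda work: f"{work} -> {role}")

TEAM = [make_stub(r) for r in ("researcher", "analyst", "writer", "reviewer")]

def orchestrate(task: str, team: list[Agent]) -> tuple[str, list[str]]:
    """Pass the work item through each agent, logging every hand-off."""
    work, log = task, []
    for agent in team:
        try:
            work = agent.handle(work)
        except Exception as error:
            # Stop at the first failure and record which role broke.
            log.append(f"{agent.role}: failed ({error})")
            break
        log.append(f"{agent.role}: ok")
    return work, log

output, log = orchestrate("market brief", TEAM)
```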

6. Sequential Workflow (Fixed Pipelines)

A linear or branching pipeline where each step feeds the next. Agents may or may not appear at every stage.

Use when

- The workflow is predictable and repeatable

- You’re building document pipelines, ETL flows, or form-processing sequences

Watch out for

- Rigid; cannot adapt dynamically

- Not ideal for ambiguous or unstructured problems
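
As a minimal sketch, a fixed pipeline is just an ordered list of functions where each output becomes the next input. The three stages below form a hypothetical ETL-style flow over comma-separated text; none of them calls a model, which illustrates that agents need not appear at every stage.

```python
def extract(raw: str) -> list[str]:
    # Split raw text into non-empty lines.
    return [line.strip() for line in raw.splitlines() if line.strip()]

def transform(rows: list[str]) -> list[dict]:
    # Parse "name, amount" rows into records.
    records = []
    for row in rows:
        name, amount = row.split(",")
        records.append({"name": name.strip(), "amount": float(amount)})
    return records

def load(records: list[dict]) -> dict:
    # Summarize instead of writing to a real destination.
    return {"count": len(records), "total": sum(r["amount"] for r in records)}

PIPELINE = [extract, transform, load]

def run_pipeline(data, steps=PIPELINE):
    """Feed each step's output into the next, in fixed order."""
    for step in steps:
        data = step(data)
    return data

summary = run_pipeline("alice, 10.5\nbob, 4.5\n")
```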

7. Human-in-the-Loop (HITL)

The agent runs until it reaches a checkpoint (approval, decision, or validation), then pauses for a human to review.

Use when

- The domain is high-stakes (healthcare, finance, governance)

- Legal or compliance oversight is required

- You want a “safety valve” on autonomy

Watch out for

- Requires UX and operations support

- Slower end-to-end, but dramatically safer
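
The checkpoint idea can be sketched with an approval callback. Everything here is an assumption for illustration: the refund scenario, the 100.0 threshold, and the synchronous `approve` function, which in a real deployment would more likely be a review queue or ticketing step.

```python
from typing import Callable

def propose_refund(amount: float) -> dict:
    # Stand-in for the agent deciding what it wants to do.
    return {"action": "refund", "amount": amount}

def needs_approval(action: dict, limit: float = 100.0) -> bool:
    # Assumed policy: anything above the limit goes to a human.
    return action["amount"] > limit

def run_with_checkpoint(amount: float, approve: Callable[[dict], bool]) -> str:
    """Execute low-risk actions directly; pause high-risk ones for review."""
    action = propose_refund(amount)
    if needs_approval(action):
        if not approve(action):
            return "rejected by reviewer"
        return f"executed after approval: refund {action['amount']:.2f}"
    return f"executed automatically: refund {action['amount']:.2f}"
```

For example, `run_with_checkpoint(500, approve=lambda action: False)` stops at the checkpoint, while a 20.00 refund never reaches the reviewer.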

Comparing the Patterns

Here is a simplified overview for readers:

| Pattern | Autonomy | Best For | Strength | Trade-off |
|---|---|---|---|---|
| ReAct | High | Investigation, debugging, search | Adaptability | High cost & latency |
| Reflection | Medium | Writing, code, analysis | Quality control | Extra passes |
| Planning | High | Complex multi-step tasks | Structure & repeatability | Over-engineering risk |
| Tool Use | High | Real-world actions | Fresh data, reliability | Tool maintenance |
| Multi-Agent | Very High | Cross-domain workflows | Specialization | Orchestration complexity |
| Sequential Workflow | Low–Med | Stable pipelines | Predictability | Inflexible |
| Human-in-the-Loop | Medium | Regulated or high-risk domains | Safety | Slower |

A key insight: Most real systems combine patterns.

For example:

- Planning + ReAct + Tool Use → AI analyst that researches and retrieves data

- Sequential Workflow + Reflection → Document automation with QC

- Multi-Agent + HITL → Full enterprise workflow with checkpoints

Practical Guidance: How to Choose a Pattern

Start simple.

If a single-agent ReAct loop solves the problem, don’t deploy six specialized roles.

Map patterns to business constraints.

- Latency-sensitive? Avoid heavy Reflection cycles.

- Compliance-heavy? Bring HITL into the loop.

- Long-running? Use Planning or Multi-Agent structures.

Evaluate cost early.

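Agentic systems multiply model calls, so measure cost per step before you scale. Spend is often concentrated in one or two steps, and optimizing the most expensive one can cut a large share of your bill (60–80% in favorable cases).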
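
A back-of-the-envelope estimate makes it easier to see where the spend goes. Every number below is an illustrative assumption (call counts, token sizes, and a flat price per 1,000 tokens), not a measurement or a real vendor rate.

```python
# Hypothetical per-run profile; all figures are illustrative assumptions.
STEPS = [
    {"name": "planning", "calls": 1, "tokens_per_call": 2000},
    {"name": "react_loop", "calls": 8, "tokens_per_call": 3000},
    {"name": "reflection", "calls": 2, "tokens_per_call": 4000},
]
PRICE_PER_1K_TOKENS = 0.01  # assumed flat price, not a real vendor rate

def cost_breakdown(steps, price_per_1k_tokens):
    """Cost per step = calls x tokens per call / 1000 x price per 1k tokens."""
    return {
        s["name"]: s["calls"] * s["tokens_per_call"] / 1000 * price_per_1k_tokens
        for s in steps
    }

def cost_shares(steps, price_per_1k_tokens):
    """Fraction of total run cost attributable to each step."""
    costs = cost_breakdown(steps, price_per_1k_tokens)
    total = sum(costs.values())
    return {name: cost / total for name, cost in costs.items()}

costs = cost_breakdown(STEPS, PRICE_PER_1K_TOKENS)
shares = cost_shares(STEPS, PRICE_PER_1K_TOKENS)
most_expensive = max(costs, key=costs.get)
```

In this assumed profile the ReAct loop accounts for roughly 71% of the run cost (24,000 of 34,000 tokens), so trimming its context or step count would matter far more than tuning the planning call.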

Invest in observability.

Logs, traces, reasoning steps, tool outputs — these are your debugging lifeline.
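
One lightweight way to start is a decorator that records every step's inputs, output, error, and duration. This is a sketch using only the standard library; the in-memory `TRACE` list and the `search` function are hypothetical, and a real system would likely ship these records to a logging or tracing backend instead.

```python
import functools
import time

TRACE: list[dict] = []  # in-memory trace store, for illustration only

def traced(step_name: str):
    """Decorator that appends one record per call to TRACE."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            started = time.perf_counter()
            record = {"step": step_name, "args": args, "kwargs": kwargs}
            try:
                record["output"] = func(*args, **kwargs)
                return record["output"]
            except Exception as error:
                record["error"] = repr(error)  # keep failures in the trace too
                raise
            finally:
                record["seconds"] = time.perf_counter() - started
                TRACE.append(record)
        return wrapper
    return decorator

@traced("search_tool")
def search(query: str) -> str:
    # Hypothetical tool standing in for a real search call.
    return f"results for {query}"

search("agent patterns")
```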

Outlook: Where Agentic Patterns Are Heading

1. Opinionated, end-to-end agent frameworks

Developers are moving from orchestration “from scratch” to using frameworks like:

- OpenAI Agents SDK

- LangGraph (LangChain’s graph-based agent engine)

- AutoGen

- crewAI

These tools bundle patterns directly into the runtime.

2. Standardized tool interfaces (MCP)

The Model Context Protocol (MCP) is rapidly becoming the “USB port” for connecting agents to external tools, databases, and enterprise systems.

3. Autonomy with boundaries (“Hybrid Autonomy”)

Future agents will be able to escalate to humans when uncertain, ask clarifying questions, or pause when risk is detected.

4. Evaluation and safety as first-class citizens

Agent evaluation will mature into a discipline of its own, with benchmarks, simulations, and automated red-teaming.

5. Multi-agent ecosystems

Instead of running one agent in isolation, enterprises will deploy fleets of interoperable agents across departments.

Conclusion

Agentic AI is moving from experimentation to architecture.

Understanding design patterns is key to building systems that are safe, reliable, and economically viable.

As models improve and frameworks mature, these patterns will converge into a shared architectural language for intelligent, autonomous software.